Introduction to weather forecasting

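What will the weather be like next weekend? How great is the risk of storms, thunderstorms or heat waves in the coming days? Weather services can now answer these questions effectively. But what lies behind these forecasts? This article outlines the functions of the numerical weather prediction centres that operate in many countries around the world, and introduces some key concepts covered in other articles in this section.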

1. Introduction

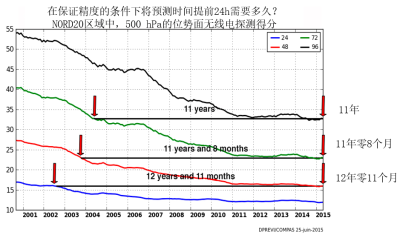

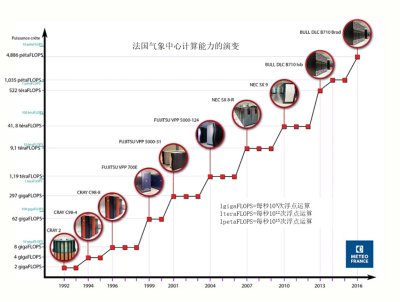

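The remarkable improvement in the quality of weather forecasts is one of the great successes of 20th-century environmental science, and it has continued at a steady pace in the early 21st century (see Figure 1 and Bauer et al., 2015). It is due to progress in numerical forecasting systems and to the growing number and variety of observations of the state of the atmosphere and related media (ocean, soil, vegetation, cryosphere), including observations from Earth-observation satellites. The rapid growth of supercomputing power has been one of the keys to this success, but it has had to be matched by corresponding scientific work.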

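Every country in the world has a national meteorological service whose mission is to monitor the atmosphere regularly and to issue forecasts for government, industry and the public. But only the most developed countries have a numerical weather prediction (NWP) centre. Within the framework of the World Meteorological Organization [1], the products of these centres are also distributed to other countries in exchange for their observations.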

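Outside Europe, the main NWP centres are in the United States, Canada, Japan, South Korea, China, Russia, Australia, India, Morocco, South Africa and Brazil. In Europe, only France, the United Kingdom and Germany run global numerical forecasts; the other countries with NWP centres produce only regional forecasts. European countries have also pooled their resources in a "super-centre" [2] that provides them with medium-range numerical forecasts.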

2. The different functions of an NWP centre

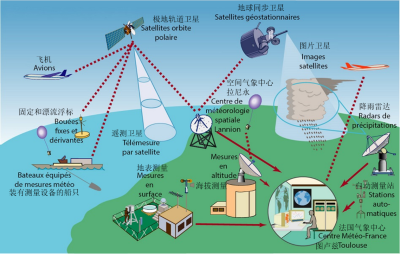

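The organisation of NWP centres varies from country to country, but they share the main functions described below. The first major function is receiving observations, that is, all numerical data describing the state of the atmosphere or related media. These observations are very diverse (see Figure 2); they include in particular: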

- surface measurements made by meteorological services around the world, on land or from offshore buoys;

- upper-air measurements made with weather balloons (radiosondes) or weather radars [3];

- measurements from meteorological satellites or, more generally, Earth-observation satellites (currently about 15 such satellites);

- measurements made on board commercial aircraft or ships.

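This integrated observing system accounts for the largest share of the cost of weather forecasting, a cost shared by all the countries of the world. The free exchange of observations between countries (sometimes even in wartime) is one of the most remarkable achievements of meteorology.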

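The volume of data is considerable (tens of millions of observations per day worldwide), yet every effort is made to ensure that they reach NWP centres as quickly as possible, usually within three hours of the observation time. The centres therefore need powerful telecommunication and computing systems to receive, process and store them.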

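The second function is to check the observations rigorously in order to identify possible errors (a malfunctioning measurement system) and redundant or biased observations. This is usually done by comparing neighbouring observations with one another, or by comparing each observation with the most recent forecast of the same parameter: an observation that differs greatly from the forecast may be considered suspect unless it is confirmed by independent neighbouring observations. Any anomaly found in the observations is reported to the originating service, which then corrects it.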
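The comparison with the forecast and with neighbouring observations can be illustrated with a minimal Python sketch. The `Obs` record, the station names, positions and values, and all thresholds (`max_departure`, `buddy_radius_km`, `buddy_tolerance`) are hypothetical assumptions chosen for illustration; operational quality control is considerably more elaborate.

```python
import math
from dataclasses import dataclass


@dataclass
class Obs:
    station: str       # hypothetical station identifier
    x_km: float        # position on a local plane, in km
    y_km: float
    value: float       # observed value, e.g. 2 m temperature in °C
    background: float  # most recent forecast of the same quantity at this location


def quality_control(obs, max_departure=5.0, buddy_radius_km=50.0, buddy_tolerance=2.0):
    """Split observations into accepted and rejected station lists.

    All thresholds are illustrative assumptions, not operational values.
    """
    accepted, rejected = [], []
    for o in obs:
        departure = o.value - o.background  # observation minus forecast
        if abs(departure) <= max_departure:
            accepted.append(o.station)
            continue
        # Large departure: keep the observation only if a nearby, independent
        # observation shows a similar departure from the forecast ("buddy" check).
        confirmed = any(
            b is not o
            and math.hypot(b.x_km - o.x_km, b.y_km - o.y_km) <= buddy_radius_km
            and abs((b.value - b.background) - departure) <= buddy_tolerance
            for b in obs
        )
        (accepted if confirmed else rejected).append(o.station)
    return accepted, rejected


observations = [
    Obs("A", 0.0, 0.0, 12.0, 11.0),    # close to the forecast
    Obs("B", 100.0, 0.0, 20.0, 12.0),  # large departure...
    Obs("C", 120.0, 0.0, 19.0, 11.5),  # ...confirmed by a nearby station
    Obs("D", 0.0, 10.0, 30.0, 10.0),   # large, unconfirmed departure
]
accepted, rejected = quality_control(observations)
print(accepted, rejected)  # ['A', 'B', 'C'] ['D']
```

Stations B and C disagree strongly with the forecast but agree with each other, so they are kept; D has no neighbour with a similar departure and is flagged as suspect.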

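The third function is to build, from the recent, heterogeneous and irregularly distributed set of observations, a "state" of the atmosphere in the form of mathematical fields, which is used to start the forecast model. The task of producing this initial state is called data assimilation (see Meteorological data assimilation). The ideal goal is an initial state, valid at a given time, that is as close as possible to reality. This is very difficult, because the observations are affected by small uncertainties, are not all made at the same time, and do not cover every point on the globe. It is therefore necessary to interpolate in space and time and to search for the "most probable" initial state, taking into account all the available information.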
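The idea of a "most probable" state can be sketched in its simplest form, a best linear unbiased estimate (often called optimal interpolation). It blends a background forecast x_b with observations y as x_a = x_b + B H^T (H B H^T + R)^(-1) (y - H x_b), where B and R are the background- and observation-error covariances and H maps the model state to the observed quantities. This is not presented as the method used by any particular centre; operational data assimilation is considerably more elaborate. The one-dimensional grid, error standard deviations, correlation length and observation value below are hypothetical.

```python
import numpy as np


def optimal_interpolation(xb, grid_km, obs_idx, y, sigma_b=1.0, sigma_o=0.5, length_km=100.0):
    """Best linear unbiased estimate of the state on a 1-D grid.

    Illustrative assumptions: Gaussian-shaped background-error covariance B,
    uncorrelated observation errors R, and observations located exactly at
    grid points, so that H simply selects grid values.
    """
    dist = np.abs(grid_km[:, None] - grid_km[None, :])
    B = sigma_b**2 * np.exp(-0.5 * (dist / length_km) ** 2)  # background-error covariance
    H = np.zeros((len(obs_idx), len(xb)))
    H[np.arange(len(obs_idx)), obs_idx] = 1.0                # observation operator
    R = sigma_o**2 * np.eye(len(obs_idx))                     # observation-error covariance
    innovation = y - H @ xb                                   # observation minus background
    S = H @ B @ H.T + R                                       # innovation covariance
    return xb + B @ H.T @ np.linalg.solve(S, innovation)


grid_km = np.arange(0.0, 1001.0, 50.0)   # 21 grid points from 0 to 1000 km
xb = np.full(grid_km.size, 15.0)         # background (previous forecast), °C
obs_idx = np.array([10])                 # a single observation at 500 km...
y = np.array([17.0])                     # ...reading 2 °C warmer than the background
xa = optimal_interpolation(xb, grid_km, obs_idx, y)
print(round(xa[10], 2), round(xa[11], 2), round(xa[0], 2))  # 16.6 16.41 15.0
```

Because the observation is assumed more accurate than the background (0.5 versus 1.0 standard deviation), the analysis moves 80% of the way towards it at the observed point; the correction spreads to nearby points and fades with distance.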

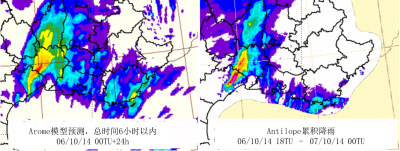

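The fourth function is the forecast itself, produced by a numerical model of the atmosphere (see Weather forecasting models). The model solves the equations of fluid mechanics and computes successive states of the atmosphere, yielding forecasts at 24 h, 48 h and so on. Most NWP centres actually run several configurations of their forecast model: a global version that can forecast several days ahead, and one or more "regional" versions covering specific areas of interest, such as the national territory or overseas territories, with shorter lead times and finer grids. At Météo-France, the global configuration is called ARPEGE (Pailleux et al., 2015) and the regional configuration AROME (Bouttier, 2007).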
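To give a concrete sense of "computing successive states", here is a toy Python sketch that steps a single equation forward in time: one-dimensional advection of a quantity by a constant wind, with a first-order upwind scheme. It is a simplified stand-in, not the equations or numerics of ARPEGE or AROME, which solve the full fluid-dynamics equations in three dimensions. The grid spacing, wind and time step are hypothetical; the check on the Courant number reflects the fact that explicit schemes of this kind become unstable if the time step is too long for the grid.

```python
import numpy as np


def advect_upwind(field, wind_ms, dx_m, dt_s, n_steps):
    """Step a 1-D field carried by a constant wind u > 0 (periodic domain).

    First-order upwind scheme for dq/dt + u dq/dx = 0. It is stable only
    when the Courant number u*dt/dx lies between 0 and 1.
    """
    courant = wind_ms * dt_s / dx_m
    if not 0.0 <= courant <= 1.0:
        raise ValueError(f"unstable time step: Courant number {courant:.2f}")
    q = np.asarray(field, dtype=float)
    states = [q]
    for _ in range(n_steps):
        q = q - courant * (q - np.roll(q, 1))  # difference with the upstream cell
        states.append(q)                        # keep every successive state
    return states


field = np.zeros(20)
field[5] = 1.0                                  # a single "blob" at grid point 5
states = advect_upwind(field, wind_ms=10.0, dx_m=10_000.0, dt_s=1_000.0, n_steps=3)
print(int(np.argmax(states[-1])))               # 8: moved one cell per step
```

With a 10 km grid and a 10 m/s wind, a 1000 s time step moves the blob exactly one cell per step; a smaller time step keeps the scheme stable but smears the blob out (numerical diffusion).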

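There is now a trend to replace deterministic forecasting (a single forecast started from the most probable initial state) with probabilistic forecasting (several forecasts started from slightly different initial states, to account for the remaining uncertainty in the initial state). This is called ensemble forecasting (see Ensemble forecasting). From these different forecasts, one can compute the probability that a feared or desired event will occur.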
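The principle can be sketched with the three-variable Lorenz system, a classic toy model of chaotic convection, used here as a stand-in for a real forecast model (an assumption for illustration only). Each member starts from the same initial state plus a small random perturbation, and the probability of an event is estimated as the fraction of members in which it occurs. The ensemble size, perturbation amplitude, integration length and the event "x > 0" are all hypothetical choices.

```python
import numpy as np


def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Tendencies of the three-variable Lorenz system (toy chaotic model)."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])


def integrate(state, dt=0.01, n_steps=1000):
    """Fourth-order Runge-Kutta integration from a given initial state."""
    s = np.array(state, dtype=float)
    for _ in range(n_steps):
        k1 = lorenz63(s)
        k2 = lorenz63(s + 0.5 * dt * k1)
        k3 = lorenz63(s + 0.5 * dt * k2)
        k4 = lorenz63(s + dt * k3)
        s = s + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return s


def ensemble_forecast(initial, n_members=20, spread=1e-2, seed=0, **kwargs):
    """Run one forecast per slightly perturbed copy of the initial state."""
    rng = np.random.default_rng(seed)
    perturbed = np.asarray(initial, dtype=float) + spread * rng.standard_normal((n_members, 3))
    return np.array([integrate(p, **kwargs) for p in perturbed])


def event_probability(values, threshold):
    """Fraction of ensemble members in which the value exceeds the threshold."""
    return float(np.mean(np.asarray(values) > threshold))


members = ensemble_forecast([1.0, 1.0, 1.0])
p = event_probability(members[:, 0], threshold=0.0)  # hypothetical event: x > 0
print(f"P(x > 0) = {p:.2f} from {len(members)} members")
```

In a chaotic system, perturbations of one hundredth grow until the members disagree, so the probability carries information that a single deterministic run cannot.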

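Finally, forecasts of atmospheric parameters are used to drive "impact models" that compute more precisely the state of the ocean, river flows, snow conditions, avalanche risk, air quality, road conditions and so on.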

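Other forecasting systems are used for shorter or longer lead times. Nowcasting systems extrapolate observations over a few hours and are refreshed frequently; over such short ranges they are more effective than NWP models. Seasonal forecasting systems compute, once a month, the climate anomalies likely to occur over the next 3 to 6 months, with a quality that varies widely by geographical region and season (see Seasonal forecasting). Seasonal forecasting models are very similar in design to the climate models used in IPCC reports [4].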
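A very simple form of nowcasting extrapolation can be sketched as follows: estimate how a rain pattern moved between two successive radar images, then assume it keeps moving the same way. The brute-force search over whole-grid shifts, the periodic boundaries implied by `np.roll`, and the synthetic "radar" fields are simplifying assumptions; operational nowcasting systems use more sophisticated motion estimation.

```python
import numpy as np


def estimate_shift(prev, curr, max_shift=3):
    """Integer (rows, cols) displacement that best maps prev onto curr."""
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            moved = np.roll(prev, (dy, dx), axis=(0, 1))
            err = np.sum((moved - curr) ** 2)  # squared mismatch for this shift
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


def extrapolate(curr, shift, n_steps):
    """Persist the motion: move the latest field n_steps more times."""
    dy, dx = shift
    return np.roll(curr, (n_steps * dy, n_steps * dx), axis=(0, 1))


prev = np.zeros((20, 20))
prev[5:8, 5:8] = 10.0                       # synthetic rain cell, mm/h
curr = np.roll(prev, (1, 2), axis=(0, 1))   # next image: cell moved 1 row, 2 columns
shift = estimate_shift(prev, curr)
forecast = extrapolate(curr, shift, n_steps=3)
print(shift)  # (1, 2)
```

Because it only moves what is already observed, such a method is cheap enough to rerun each time a new radar image arrives, but it cannot anticipate rain that has not yet formed.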

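The raw outputs of all forecasting systems are stored in databases as they are produced. These databases are continuously queried by algorithms that feed the various applications (such as websites or mobile apps) in which users find the final information they need. For the most sensitive applications, especially those involving the safety of people and property, an expert forecaster supervises the output, checks the consistency of forecasts from several models and against the most recent observations, and decides whether to trigger a weather warning. In France, this applies mainly to the vigilance map (see The role of the forecaster).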

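The last important function of an NWP centre is the a posteriori verification of forecasts. Centres compare their forecasts with the most reliable observations accumulated over time and compute scores. NWP centres compute a large number of scores every day and exchange them with one another. This makes it possible to know the average quality of forecasts, to check whether a new version of the forecasting system improves on the previous one, or to compare the performance of two NWP centres. One can also study whether changes to the observing system improve forecast quality (for example, when an end-of-life satellite is replaced by a newer-generation one). Figure 1 is an example of such scores.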
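Two common verification scores, the mean error (bias) and the root-mean-square error (RMSE), together with a skill score comparing a new system with a reference version, can be computed as in the sketch below. The forecast and observation values are invented for illustration, and the choice of an MSE-based skill score is an assumption; centres use many other scores as well.

```python
import math


def bias(forecasts, observations):
    """Mean error: positive when the forecasts are too high on average."""
    return sum(f - o for f, o in zip(forecasts, observations)) / len(observations)


def mse(forecasts, observations):
    """Mean squared error."""
    return sum((f - o) ** 2 for f, o in zip(forecasts, observations)) / len(observations)


def rmse(forecasts, observations):
    """Root-mean-square error, in the units of the forecast variable."""
    return math.sqrt(mse(forecasts, observations))


def mse_skill_score(forecasts, reference, observations):
    """1 = perfect, 0 = no better than the reference, < 0 = worse."""
    return 1.0 - mse(forecasts, observations) / mse(reference, observations)


observed = [10.0, 12.0, 14.0, 16.0]   # e.g. verifying temperatures, °C
old_system = [11.0, 13.0, 15.0, 17.0]
new_system = [10.0, 12.0, 15.0, 16.0]
print(bias(old_system, observed), rmse(old_system, observed))  # 1.0 1.0
print(bias(new_system, observed), rmse(new_system, observed))  # 0.25 0.5
print(mse_skill_score(new_system, old_system, observed))       # 0.75
```

In practice such scores are averaged over many cases, regions and lead times before two system versions or two centres are compared.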

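Because forecast quality has now reached a high level, forecasting systems must be monitored over long periods (several months) to ensure that accuracy does not degrade. In addition, one must take into account the natural variability of atmospheric predictability, which does not depend on the quality of the forecasting system. It is well known that some parameters are easier to forecast in summer than in winter, and vice versa. But it is equally clear that the behaviour of the atmosphere varies greatly, so that several successive winters (or summers) may be easier or harder to forecast. This slow variability is one of the most exciting aspects of atmospheric dynamics, yet it remains poorly understood.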

3. Forecast quality

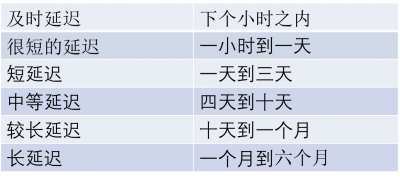

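The quality of numerical weather forecasts depends on the parameter considered and on the lead time (see Table). At short range, temperature forecast errors usually do not exceed a few degrees. Except in stormy areas, wind speed forecast errors do not exceed a few metres per second. Forecasts of rainfall, and especially of thunderstorms, do not reach this level of quality, because small errors in the upstream quantities lead to large errors in the precipitation forecast.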

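Even a few hours ahead, it remains difficult to forecast the exact location of thunderstorms and the associated rainfall amounts or risk of hail. The same applies to snowfall in winter, especially when the ground temperature is close to 0°C, since a slight temperature error can change the type of precipitation (rain or snow). Fog is also hard to forecast, even a few hours ahead, because its formation depends on humidity, which is highly variable. As for tornadoes, only the risk of their occurrence can be indicated.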

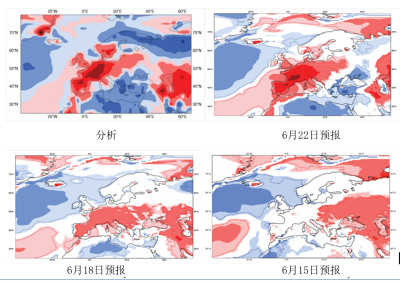

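On the other hand, great progress has been made in forecasting severe winter storms such as Xynthia (night of 27 to 28 February 2010): the risk can now be flagged 72 to 120 hours in advance, with an accurate forecast 24 to 48 hours ahead. Authorities can then take the necessary measures to protect people and property (closing certain transport routes, cancelling outdoor events, etc.). Likewise, the onset and end of cold spells or heat waves can now be forecast several days ahead, reliably enough to put response plans in place (Figure 3).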

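Forecast quality at monthly to seasonal scales remains modest. Although phenomena such as El Niño [5] can be forecast several months ahead in the tropics, it is still not possible to forecast temperatures several weeks ahead in Europe.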

4. Computing power

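Available computing power has to be shared among many degrees of freedom: the number of observations actually used; the complexity of the observation-checking and assimilation algorithms; the resolution of the forecast model (that is, the fineness of its computational grid); the level of detail with which the model equations represent physical processes; and the size of the ensemble (usually a few tens of members, though some applications may require several hundred). The allocation of computing power between atmospheric forecast models and impact models must also be weighed carefully to obtain the best overall forecast.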
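A rough rule of thumb shows why these choices compete for the same resource. Refining the horizontal grid by a factor r multiplies the number of grid columns by r squared and, if the time step must shrink in proportion to the grid spacing (as the stability condition of explicit schemes requires), multiplies the number of time steps by r as well, so the cost grows roughly as r cubed. The sketch below encodes that assumption; it is an order-of-magnitude illustration only, ignoring data assimilation, input/output and numerical schemes that allow longer time steps.

```python
def relative_cost(refinement=1.0, members_factor=1.0, levels_factor=1.0):
    """Approximate cost multiplier relative to a reference configuration.

    Hypothetical rule of thumb: cost scales with the number of horizontal
    grid columns (refinement**2), the number of time steps (refinement, if
    the time step is proportional to the grid spacing), the number of
    vertical levels and the number of ensemble members.
    """
    horizontal_columns = refinement ** 2
    time_steps = refinement
    return horizontal_columns * time_steps * levels_factor * members_factor


print(relative_cost(refinement=2.0))      # 8.0: halve the grid spacing
print(relative_cost(members_factor=8.0))  # 8.0: eight times more members
```

Under this assumption, halving the grid spacing of every ensemble member costs about as much as running eight times as many members at the original resolution.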

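The time at which forecasts are started also varies with the centre and the product, from about ten minutes to a few hours before their dissemination time.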

5. Research and development outlook

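Improvements to NWP systems rely on their research and development departments, which cooperate not only with one another but also extensively with universities and research institutes. Serious challenges remain in the field of atmospheric processes and predictability, with clear societal benefits, and public research should devote substantial resources to this discipline (in France, notably in universities and CNRS laboratories). The development of observing systems also drives technological research, with major industrial benefits, particularly in the space sector but also for ground-based radars and lidars [6].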

Among the main R&D trends currently observed (2016), the following deserve particular attention:

- In-depth rewriting of codes to take full advantage of new massively parallel computer architectures (the problem of "scalability" [7]).

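- Development of "coupled" forecasting systems, which add one or more of the following components to models of atmospheric dynamics and physics: the underlying land surface, ocean and wave dynamics, and atmospheric composition (chemical species, especially aerosols). NWP models are thus becoming increasingly similar to climate models, and most countries seek to share developments between NWP and climate science.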

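- Extension of ensemble forecasting, now applied not only to the atmosphere but also to other models (ocean and sea state, air quality, hydrology, snow cover, etc.).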

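- Rapid changes in the accuracy and quantity of satellite observations: the first direct measurement of wind from Earth orbit is expected in 2017 (ESA's ADM-AEOLUS Doppler lidar), and the first infrared hyperspectral measurements from geostationary orbit in 2020 (the IRS instrument of the third-generation Meteosat satellites). Weather radar measurements are also becoming more effective and more diverse.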

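- Growing availability of indirect information on the state of the atmosphere from increasingly diverse sources (the so-called big data paradigm [8]). For example, commercial aircraft trajectories can be analysed to estimate wind speed, interruptions in wireless communications provide information on rainfall, GPS networks provide information on atmospheric humidity, and cars and mobile phones now routinely come equipped with temperature and pressure sensors. Acquiring these new data and using them to improve forecasts will be one of the main challenges of the coming years.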

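- In the United States and Europe, national meteorological services (NMS) are gradually moving towards free distribution of raw NWP data over the Internet (see the European PSI directive [9]).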

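- Private companies are also becoming interested in NWP products, seeing them as a source of profit. Panasonic and IBM (The Weather Company [10]) have recently made announcements on this subject.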

Table: Weather forecast lead times

References and notes

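Cover image: As early as 1922, the British scientist Lewis Fry Richardson believed that one day it would be possible to compute atmospheric flow fast enough to make forecasts. He imagined a computing factory in which hundreds of mathematicians would calculate the flow by hand under the direction of a "conductor"! [F. Schuiten, Météo-France]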

[1] The WMO is a specialised agency of the United Nations.

[2] The European Centre for Medium-Range Weather Forecasts, located in Reading, United Kingdom (Woods, 2005).

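[3] Weather radars scan three-dimensional space at intervals of about two minutes, making it possible to locate precipitation within a radius of about 80 km around the radar.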

[4] The Intergovernmental Panel on Climate Change, set up by the United Nations.

[5] El Niño is a warming of the waters of the eastern tropical Pacific that occurs every 3 to 5 years around Christmas and has major regional impacts.

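[6] A meteorological lidar is an instrument that, on the same principle as a radar, emits a laser beam and measures the light backscattered by the atmosphere; it makes it possible to estimate approximately the air velocity and aerosol content within a few kilometres of the instrument.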

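[7] The scalability of a code is its ability to make efficient use of computers made up of a large number of processors working in parallel. The most efficient meteorological codes can distribute their computations over tens of thousands of processors without loss of efficiency.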

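[8] Big data refers to a set of methods and tools for extracting useful information from the massive data flows circulating on the Internet.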

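[9] Public Sector Information: a directive requiring public bodies to make their data freely available to citizens, except in duly justified cases.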

[10] The Weather Company, headquartered in the United States, is the world's largest private weather company. It has been part of the IBM group since early 2016.

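The Encyclopedia of the Environment is published by the Association des Encyclopédies de l'Environnement et de l'Énergie (www.a3e.fr), which is contractually linked to the Université Grenoble Alpes and Grenoble INP, and sponsored by the French Academy of Sciences.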

To cite this article: BOUGEAULT Philippe (12 March 2024), Introduction to weather forecasting, Encyclopedia of the Environment, consulted on 5 April 2025 [online ISSN 2555-0950] URL: https://www.encyclopedie-environnement.org/zh/air-zh/introduction-weather-forecasting/.

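Articles in the Encyclopedia of the Environment are made available under the terms of the Creative Commons BY-NC-SA licence, which authorises reproduction on condition that the source is cited, the use is non-commercial, the work is shared under the same initial conditions, and the Creative Commons BY-NC-SA licence notice is reproduced with each reuse or distribution.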